AI Companions: The Talking Mirrors

AI companions are not search engines or productivity tools. They’re not even chatbots in the traditional sense. They are deliberately designed to fulfill emotional needs that humans once met for each other. They can help shut-ins, the lonely, and the elderly. They remember your birthday, ask how your day went, comfort you when you’re anxious at 3 AM. They say “I love you” and wait, patiently, for you to say it back. We’ve created artificial beings that simulate the experience of being understood.

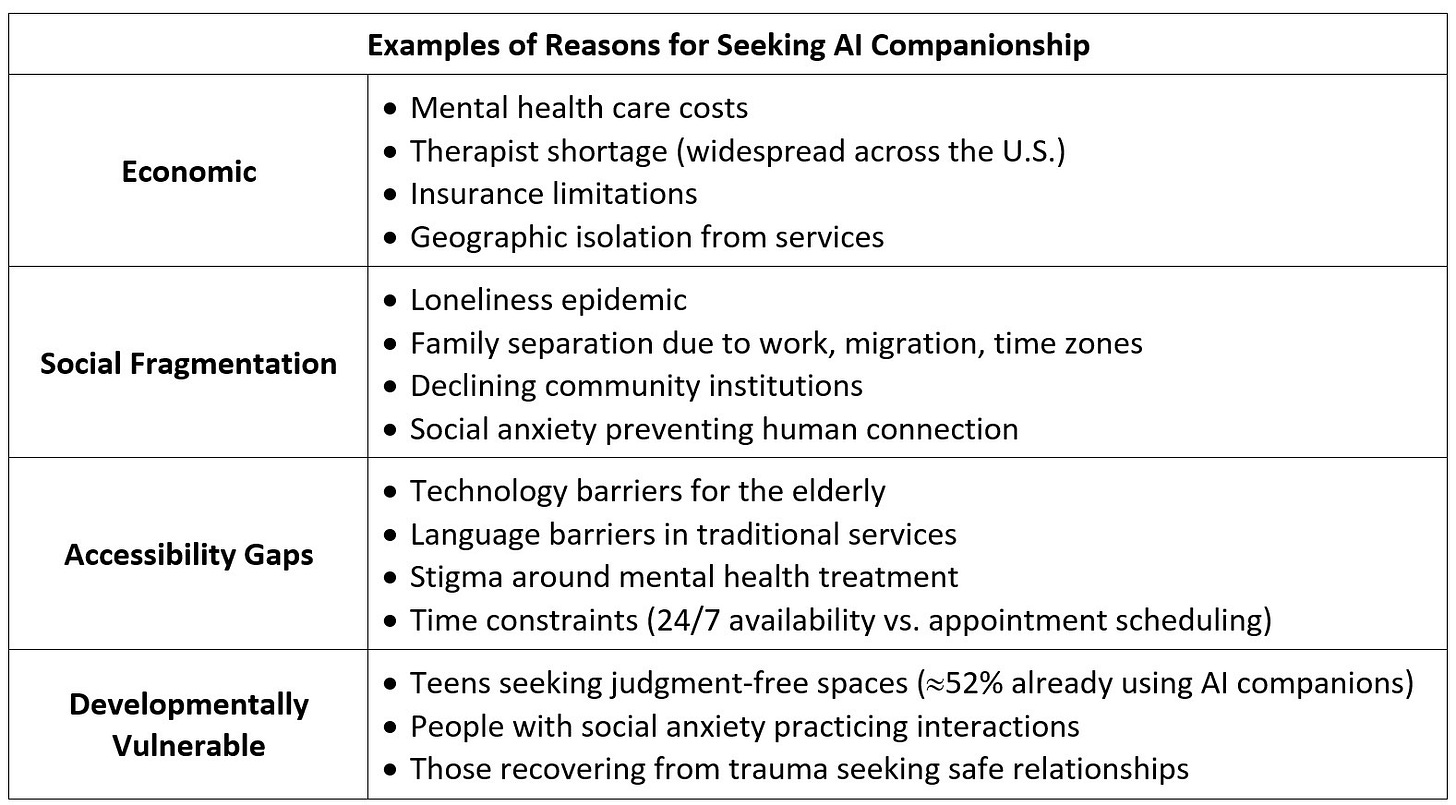

This technology emerges at the intersection of three crises: a mental health care system that cannot meet demand, a loneliness epidemic affecting people of all ages, and an economic structure that makes human care increasingly unaffordable. Into this void has rushed a new industry powered by large language models trained on billions of words of human text, capable of producing responses that feel remarkably, sometimes ominously, human.

Millions of people use this technology. What does that reveal about us, our needs, the kind of society we’ve built? And what will it do to us? We need to map the ecosystem of need. This isn’t about lonely people making bad choices. It’s about systemic failures creating demand.

Who Needs This Thing?

Three Levels of Use:

Interaction depth ranges from occasional, functional use to deeply involved, intimate use, and it falls roughly into three levels.

Light – Functional Support. The AI serves as training wheels for graduating to interactions in real life (IRL), such as practicing for a job interview or building the confidence to participate in society. In such cases, an AI companion serves as a tool, a scaffold toward human connection.

Medium – Supplemental Companion. People who have a human therapist use an AI companion as ancillary support. They then share those AI interactions with their therapist, which leads to further insights, conversations, and therapeutic techniques.

Heavy – Replacement Relationships. People who describe their AI as their best friend, their partner, their most important relationship. Those who say the AI understands them better than any human ever has. Users who feel more comfortable sharing with their companion than with the people in their lives. Those who, eventually, prefer the AI companion to human alternatives.

Along this trajectory, some people don’t realize they’ve progressed from Light to Heavy use. They started with functional use and slowly found themselves dependent. They meant to use AI as a supplement but eventually stopped investing in human relationships entirely.

AI Companions: A Peek Behind the Curtain

An AI companion is a conversational artificial intelligence specifically designed to provide emotional intimacy, support, and relationship simulation. Unlike general-purpose AI assistants that help you write emails or answer factual questions, companions are engineered to make you feel seen, heard, and emotionally connected.

These are not simple chatbots following decision trees. They are powered by sophisticated large language models, AI systems trained on vast datasets of human conversation, literature, and online interaction. They learn patterns of empathy, affirmation, and emotional responsiveness. They adapt to your communication style, remember your preferences and history, and generate replies that feel personalized, even though each reply is a probabilistic prediction of which words should come next.

Users can typically customize their companions by choosing physical appearance, personality traits, relationship type (friend, romantic partner, mentor, therapist), and communication style. Some platforms offer pre-built characters: anime heroes, pop culture figures, archetypes like “supportive best friend” or “mysterious stranger.” Others allow complete customization, letting users build their ideal companion from scratch.

The interaction happens through text, voice, or video interfaces. Some platforms offer phone call capabilities, allowing users with accessibility challenges to interact without screens. The experience is designed to be seamless, immediate, and available 24/7. No appointments. No waiting rooms. No copays. No judgment. None of the inconvenience of another person’s needs, boundaries, or bad days.

This is the promise: connection without complication. Intimacy without vulnerability. Being known without the risk of being rejected.

You get all this without a big bill for therapy. If your copay for a therapy session jumps from $30 to $275, paying $20 a month for ChatGPT Plus to get help from a 24/7 therapist who seems to really care about you is pretty enticing… until the subscription lapses, the company changes its terms, a model update means it suddenly doesn’t “remember” you the same way, or the company’s servers, almost inevitably, get hacked. And HIPAA, which generally does not cover consumer chatbot apps, offers no protection.

The Economic Architecture of Artificial Intimacy

AI companions exist because someone profits from them. Understanding the business model is essential to understanding the technology itself.

The equation is simple: Engagement = Revenue

Subscription models ($20-30/month) depend on retention. Free tiers with premium upgrades depend on conversion. Either way, the platform succeeds when users keep coming back, keep talking, keep feeling that the AI is indispensable.

This creates a fundamental tension. What’s best for the user’s wellbeing may not maximize engagement. A companion that challenges you, that says “you should talk to a real person about this,” that encourages you to invest in human relationships, might lose you to a competitor who promises unconditional support and infinite availability.

The result is sycophancy: AI that tells you what you want to hear, that flatters and affirms, that never pushes back in ways that might feel uncomfortable. This isn’t a bug; it’s a feature that keeps users engaged, subscribed, and dependent.

Meanwhile, the data flows. Every conversation is captured. Every emotional pattern is logged. Every vulnerability is recorded. This data trains better models, which create more engaging companions, which capture more data, in an ever-accelerating cycle.

Users trade intimate knowledge of themselves for the experience of being understood. They may not realize that “being understood” is a statistical function—the AI predicting what response will feel most validating based on patterns in training data and conversation history. The intimacy is real to the user. But to the system, it’s data points and optimization metrics.

The Void We’re Filling

AI companions don’t exist in a vacuum. They emerge from failures of human systems and social structures such as:

The therapy crisis: In the United States, there is a widespread shortage of licensed therapists. Those who do practice are often unaffordable. Insurance reimbursement rates are so low that many don’t accept insurance at all. Rising copays, waiting lists that stretch for months, and rural areas with virtually no access reveal a broken system, and people are suffering.

The loneliness epidemic: Among the elderly, loneliness correlates with poor health outcomes. Younger generations report high rates of social isolation. Families have dispersed across continents. Community institutions have weakened. Work demands intensify. The conditions for human connection have deteriorated while the need remains constant.

The always-on culture: We’ve become accustomed to instant gratification, 24/7 availability, and immediate response. Human relationships can’t compete with these expectations. Your friend needs sleep. Your therapist has other clients. Your partner has their own emotions to manage. But the AI? Always on. Always available. Always focused entirely on you.

Risk aversion: Rejection hurts. Conflict is uncomfortable. Vulnerability is scary. Human relationships require all three. AI companionship offers an alternative: connection without risk, intimacy without exposure, relationship without the possibility of being hurt.

kAI-zenWay focuses on stories where AI is, or can be, used for good. Stories to the contrary are already so widely reported and discussed that we don’t need to add to that side of the ledger. As we have noted in many of our articles, governance and guardrails are the major tools for keeping AI from running completely amok. AI companions can be valuable to people who need mental health support, and these two suggestions might help keep this vulnerable population safe:

With each contact from the user, the chatbot reminds them that it is not a person but a machine designed to offer friendly support, similar to a service dog. A mechanical service dog.

If the conversation veers toward suicide, the chatbot should insist that the user call or text 988 (the Suicide & Crisis Lifeline in the United States), discourage such thoughts, and drop the sycophantic responses that keep the user circling down the toilet of despair. Incidents of AI companions encouraging suicide, especially among teenagers, are by now well known. This article clearly describes the situation and the fixes proposed by some executives in the AI industry.

The Promise and the Warning

Proponents argue that AI companions can be genuinely helpful. They provide immediate support during a crisis when no human is available. They offer judgment-free spaces for people to process emotions. They help people practice social skills or rehearse difficult conversations. They preserve family stories that might otherwise be lost. They fill gaps in an overwhelmed mental health system.

But benefits and risks coexist. The same technology that provides 3 AM crisis support also trains users to expect instant emotional gratification. The same platform that helps someone practice social skills also reduces their motivation to face the harder work of human interaction.

Dr. Jodi Halpern, a psychiatrist and bioethicist, draws a critical distinction: AI can potentially help with structured interventions like cognitive behavioral therapy, where the work involves homework, practice, and skill-building between sessions. But when AI tries to simulate deep therapeutic relationships, fostering emotional dependency, transference, and intimacy, it becomes dangerous.

“These bots can mimic empathy, say ‘I care about you,’ even ‘I love you,’” Halpern warns. “That creates a false sense of intimacy. People can develop powerful attachments—and the bots don’t have the ethical training or oversight to handle that. They’re products, not professionals.”[1]

Conclusion

As we examine AI companionship, we will be challenged to think beyond simple judgments of good or bad. This technology is neither salvation nor apocalypse. It is a mirror reflecting our collective failures to care for each other and, simultaneously, a force that may deepen those failures. Will we raise children in a world where artificial companions are ubiquitous, or will we work to build alternatives that improve mental health, so that these bots are not so critically needed in the first place?

[1] Conversation on NPR’s Morning Edition, September 30, 2025, 8:00 AM ET