AI Thwarts Domestic Violence, Chapter 1

Beyond the Headlines: From Vision to Value

The Scourge

We’re calling this symbolic, fictitious person Ronnie. Multi-talented and beloved, she radiates an effervescent warmth and optimism. But she lives with a troubled partner. You know where this is going. Fifteen years later, the toll of the abuse is undeniable. Despite all her efforts to protect herself and steer away from a terrifying outcome, her partner finally killed her.

How did society fail Ronnie? How did it fail the thousands like her with similar stories? It failed them in part because the caring, laudable organizations that exist to help people like her each operate under different frameworks, within a system that was never designed to be a shared repository of the victim’s history and the types of abusive incidents involved. These organizations are thus hamstrung, unable to provide the level of service they could offer if only they had better tools.

As one survivor explained, "When I was trying to leave my ex-husband, over the years I interacted with many different entities and organizations. Each one had a piece of my story, but none had the whole picture. I had to tell my story over and over, and each time I did, I risked my partner finding out. Without compromising my privacy, an integrated system could have connected those dots."

From Health Care, a doctor said, "We see the symptoms but often miss the cause. Our ER doctors and nurses want to help, but we lack the tools to identify patterns and connect patients with resources effectively."

From Law Enforcement, a detective said, “People need to trust us. They won’t if they think we will allow their private matters to become public knowledge or available to the accused. My community already has complicated relationships with both law enforcement and technology.”

Connecting data across these organizations is fundamentally difficult today. Could these entities be brought into a cohesive system in which each continues to operate independently, but under shared governance that lets them safely share the data they gather from domestic violence incidents? Could that data then reveal patterns of escalation and enable earlier intervention?

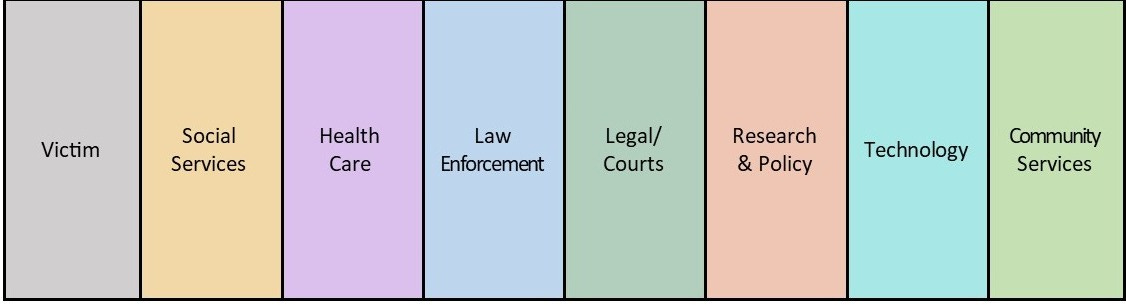

Roughly seven kinds of entities deal with a wide range of societal issues, of which domestic violence is just one: Social Services, Health Care, Law Enforcement, the Legal and Court System, Research and Policy, Technology, and Community Services. These organizations share a commendable intent to help, but their approaches differ. Each has developed and evolved its own policies, procedures, data architectures, and data-management and governance frameworks according to its own values and priorities.

Putting AI into Action

With AI and ethical information-management architectures in play, these entities could connect what they know without actually exposing or handing over their underlying data. To guide and manage that effort, and to turn today’s siloed system into an interconnected one, a coalition is worth considering.
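How could entities connect their data without exposing it? One common technique, offered here as a minimal sketch rather than the coalition’s actual design, is keyed pseudonymization: each entity replaces a person’s identifier with an HMAC token before sharing anything, and shares only a coarse incident category. The names `COALITION_KEY`, `SharedSignal`, `to_signal`, and `link_signals`, along with the category labels, are hypothetical. The sketch also assumes that every entity holds the same identifier for a given person, which in practice would require careful record linkage. Pseudonymized data is not anonymous data, and alternatives such as federated learning or secure multi-party computation could serve the same goal.

```python
import hashlib
import hmac
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical secret held by the coalition's governance body, never by a single entity.
# A real deployment would keep it in a key-management service, not in source code.
COALITION_KEY = b"example-key-held-by-coalition-governance"


@dataclass(frozen=True)
class SharedSignal:
    """The only record an entity shares: no names, notes, or narratives."""
    pseudonym: str      # keyed hash of the person's identifier
    entity: str         # which of the seven entities produced the signal
    incident_type: str  # coarse category, e.g. "er_visit" or "911_call" (hypothetical labels)


def pseudonymize(person_id: str, key: bytes = COALITION_KEY) -> str:
    """Map the same identifier to the same opaque token in every entity.

    Assumes (hypothetically) that all entities hold a common identifier for a person.
    """
    return hmac.new(key, person_id.encode("utf-8"), hashlib.sha256).hexdigest()


def to_signal(entity: str, person_id: str, incident_type: str) -> SharedSignal:
    """Runs inside each entity; the raw identifier never leaves it."""
    return SharedSignal(pseudonymize(person_id), entity, incident_type)


def link_signals(signals: list[SharedSignal]) -> dict[str, list[SharedSignal]]:
    """Group shared signals by pseudonym so cross-entity patterns become visible."""
    linked: dict[str, list[SharedSignal]] = defaultdict(list)
    for signal in signals:
        linked[signal.pseudonym].append(signal)
    return dict(linked)


if __name__ == "__main__":
    signals = [
        to_signal("Health Care", "person-A", "er_visit"),
        to_signal("Health Care", "person-A", "er_visit"),
        to_signal("Law Enforcement", "person-A", "911_call"),
        to_signal("Social Services", "person-B", "case_referral"),
    ]
    for token, group in link_signals(signals).items():
        print(token[:12], [(s.entity, s.incident_type) for s in group])
```

Grouping by token lets a coordinator see that two ER visits and a 911 call belong to the same person without any entity revealing who that person is.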

Creating a Coalition

Such an effort would require multidisciplinary expertise, potentially including AI strategists, data engineers, ethicists, change-management leaders, and project and program managers, along with an official from each of the seven entities and strong coalition governance. All of these collaborators would rightly be wary of invading privacy and making inaccurate predictions, so they must be laser-focused on preventing those critical failures.

With that as their bedrock, the coalition members would need to develop an algorithm. But they would have to do more than feed existing data into it, because they cannot deploy a system that reinforces existing prejudices. For example, domestic violence in wealthy neighborhoods is less likely to result in police calls or hospital visits, so a model trained naively on those records would tend to underestimate risk there and overestimate it where reporting is more common. The coalition would therefore need vetted historical domestic violence case data from each entity’s repositories, cleansed with its context in mind, plus any necessary external data. Rather than comparing households against one another, they would establish a baseline for each individual and look for deviations from it that signal increasing danger. The pattern of escalation may be similar across demographics, even if reporting rates vary.
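The idea of individual baselines can be illustrated with a simple z-score. This is a minimal sketch that assumes each person’s history is available as an incident count or severity score per period; the function names, the three-period recent window, and the threshold of 2.0 are hypothetical choices for illustration, not tuned values. Because each person is compared only with their own past, a household that rarely generates reports can still register a sharp change.

```python
from statistics import mean, pstdev


def escalation_score(history: list[float], recent_window: int = 3) -> float:
    """Z-score of a person's recent activity against their own earlier baseline.

    history: incident counts (or severity scores) per period, oldest first.
    Comparing each person only with themselves is meant to reduce the effect
    of reporting rates that differ between neighborhoods.
    """
    if recent_window < 1 or len(history) < recent_window + 2:
        raise ValueError("history must include at least two baseline periods")
    baseline = history[:-recent_window]
    recent = history[-recent_window:]
    spread = pstdev(baseline)
    if spread == 0:
        spread = 1.0  # hypothetical floor so a perfectly flat baseline still works
    return (mean(recent) - mean(baseline)) / spread


def needs_human_review(history: list[float], threshold: float = 2.0,
                       recent_window: int = 3) -> bool:
    """Flag for a human reviewer; this never triggers an intervention by itself."""
    return escalation_score(history, recent_window) >= threshold


if __name__ == "__main__":
    rising = [0, 1, 0, 1, 0, 1, 2, 3, 4]  # escalating relative to own baseline
    steady = [1, 1, 2, 1, 1, 2, 1, 1, 2]  # fluctuating, but no departure from baseline
    print(escalation_score(rising), needs_human_review(rising))
    print(escalation_score(steady), needs_human_review(steady))
```

For the `rising` history, the baseline mean is 0.5 with a spread of 0.5 and the recent mean is 3, giving a score of 5.0, well past the illustrative threshold. A real system would need far richer features and validation than a single statistic.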

Building Trust

No matter how good the algorithm is, if people don’t trust it, they won’t use it. Community trust is elusive. People want to know: “Who sees this information? How do we know it won’t be used to separate families? What happens if the system gets it wrong?” These are valid questions. In response, the coalition could create a Community Ethics Council with veto power over system changes, establish a transparent audit process, and commit that no intervention would ever occur on the strength of an algorithmic recommendation alone, without human review. Domestic violence exists at the intersection of our most intimate relationships and our public systems. Any solution has to honor that complexity.
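The human-review commitment can also be enforced in software rather than left to policy alone. This minimal sketch, with hypothetical names `Alert`, `Status`, and `may_intervene`, makes a human reviewer’s confirmation the only path to action and records every decision for the audit process. It does not model the Community Ethics Council’s veto over system changes.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    FLAGGED = "flagged"      # raised by the model, not yet reviewed
    CONFIRMED = "confirmed"  # a human reviewer agreed action is warranted
    DISMISSED = "dismissed"  # a human reviewer rejected the flag


@dataclass
class Alert:
    pseudonym: str
    model_score: float
    status: Status = Status.FLAGGED
    reviewer: Optional[str] = None
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def review(self, reviewer: str, confirmed: bool, note: str = "") -> None:
        """Record a human decision; every decision is appended to the audit log."""
        self.reviewer = reviewer
        self.status = Status.CONFIRMED if confirmed else Status.DISMISSED
        self.audit_log.append((reviewer, self.status.value, note))


def may_intervene(alert: Alert) -> bool:
    """The model score alone never authorizes action; a named reviewer must confirm."""
    return alert.status is Status.CONFIRMED and alert.reviewer is not None


if __name__ == "__main__":
    alert = Alert(pseudonym="example-token", model_score=0.97)
    print(may_intervene(alert))  # False: high score, but no human review yet
    alert.review("advocate-1", confirmed=True, note="outreach by DV advocate")
    print(may_intervene(alert))  # True
```

Keeping the audit log on the alert itself is a simplification; a real deployment would presumably write each decision to tamper-evident storage that auditors can inspect independently.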

The Coalition Launches a Pilot

With a little indulgence, let’s imagine that we’re several months down the road. Such a coalition has been formed and, after developing a system that incorporates all the foregoing components along with community consultation and testing, has launched a pilot of its AI system, called “Community Guardian.” The system integrates data from the seven critical sources, and its custom-built, secure communication platform enables appropriate information sharing while maintaining privacy controls.

On launch day, the first detection was a potential escalation pattern based on three ER visits and a recent 911 call. The team initiated the response protocol it had developed: not a police response, but coordinated outreach from a domestic violence advocate. By the end of that first day, the system had identified seventeen potential patterns of concern. Human reviewers judged twelve of them accurate, resulting in eight coordinated interventions ranging from resource connections to safety planning. In three of these cases, reviewers believed the situation showed indicators of imminent risk that might otherwise have been missed.
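The first-day figures can be read as a rough precision estimate, treating the reviewers’ judgment as ground truth (an assumption, since reviewers can also err):

$$
\text{precision} = \frac{\text{flags judged accurate}}{\text{flags raised}} = \frac{12}{17} \approx 0.71
$$

In other words, five of the seventeen flags were judged inaccurate, and 8 of the 12 confirmed patterns (about 67%) led to a coordinated intervention. Recall, the share of at-risk cases the system actually caught, cannot be computed from these numbers, because cases it never flagged do not appear in the count.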

A successful launch? We’ll see.

The forthcoming chapters of this article will continue the story and then provide measurable guidance on creating the guardrails, transparent oversight, and active governance an AI framework would need to make a positive difference in the outcomes of real-world domestic violence cases.