Strategy and Architecture, Continued

Part 1 of this chapter covered Building a Multi-Modal System, Platform Layered Architecture, Stakeholder Involvement, and Cultural and Societal Elements. Part 2 covers Governance and Stakeholder Management, Change Management, Training and Capacity Building, and Success Measurement. Part 3 will consider Challenges and provide an Implementation Roadmap.

Governance and Stakeholder Management

Effective governance requires structured processes that balance innovation with responsibility. This balance is particularly crucial in a domain where both technological shortcomings and unchecked advancement can result in serious harm.

Ethical Oversight Committee:

Multi-stakeholder representation including survivors

Regular review of system performance and outcomes

Authority to recommend system modifications

Transparency reporting responsibilities

Data Governance Council:

Clear data ownership policies

Usage limitations and consent management

Privacy protection standards

Data retention and destruction protocols

Algorithmic Accountability Process:

Regular bias testing and mitigation

Performance auditing across demographic groups

False positive/negative impact assessment

Independent verification of system operation
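
As one illustration of performance auditing across demographic groups, the sketch below computes false positive and false negative rates per group from labeled outcomes. The record layout, the group labels, and the function name `error_rates_by_group` are assumptions made for this example; they do not describe any particular system.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return false positive and false negative rates for each group.

    Each record is a (group, predicted, actual) tuple, where `predicted`
    and `actual` are booleans (True = flagged / confirmed high risk).
    This record layout is hypothetical and used for illustration only.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, predicted, actual in records:
        if predicted and actual:
            counts[group]["tp"] += 1
        elif predicted and not actual:
            counts[group]["fp"] += 1
        elif not predicted and actual:
            counts[group]["fn"] += 1
        else:
            counts[group]["tn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]  # cases that were not actually high risk
        positives = c["tp"] + c["fn"]  # cases that were actually high risk
        rates[group] = {
            # None signals that the rate is undefined for this group.
            "false_positive_rate": c["fp"] / negatives if negatives else None,
            "false_negative_rate": c["fn"] / positives if positives else None,
        }
    return rates
```

A large gap between groups in either rate would be a prompt for the accountability process to investigate further, not a verdict on its own. Small groups produce unstable rates, so any real audit would also need to report sample sizes.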

Stakeholder Engagement Structure:

Regular feedback channels from all stakeholder groups

Clear escalation paths for concerns

Collaborative development processes

Transparent decision-making documentation

Each stakeholder group brings legitimate but sometimes conflicting expectations that must be harmonized for successful implementation. Managing those expectations is not just a matter of communication; it also depends on governance structure, implementation approach, and evaluation frameworks.

Law Enforcement Expectations:

System reliability and evidential standards

Integration with existing workflows

Clear jurisdiction and responsibility delineation

Resource implications for increased early detection

Victim Expectations:

Privacy and security guarantees

Control over their own data and case progression

Realistic expectations about system capabilities

Clear understanding of human vs. AI components

Social Service Provider Expectations:

Workload impact clarity

Resource needs for responding to system alerts

Training requirements and timelines

Performance measurement standards

Community Expectations:

Transparency in system operation

Evidence of effectiveness and fairness

Cultural appropriateness guarantees

Accessibility across digital divides

Change Management

A phased change management program might include:

Phase 1: Foundation Building

Stakeholder mapping and engagement planning

Current state assessment of existing systems

Data sharing agreement development

Initial technology proof-of-concept

Ethical framework establishment

Phase 2: Pilot Implementation

Limited deployment with intensive monitoring

Selected stakeholder training and integration

Baseline data collection for comparison

Rapid iteration based on initial feedback

Governance structure establishment

Phase 3: Expansion and Integration

Geographic and stakeholder expansion

Deep integration with existing systems

Comprehensive training programs

Community awareness campaigns

Continuous improvement processes implementation

Phase 4: Sustainability and Evolution

Long-term funding structure development

Ongoing training and capacity building

System adaptation to emerging needs

Research and outcome publication

Technology refresh planning

Training and Capacity Building

Successful implementation requires an exceptionally comprehensive training strategy because of the sensitivity, complexity, and high stakes of this application. Because the system serves a vulnerable population, the strategy should prioritize safety and ethics. Training must also integrate stakeholder-specific domain knowledge, build context awareness and sensitivity, and cover multidisciplinary evaluation metrics.

For Technical Users:

System operation and maintenance training

Alert response protocols

Data interpretation guidelines

System limitation awareness

Troubleshooting capabilities

For Service Providers:

Integration with existing workflows

Risk assessment interpretation

Appropriate intervention selection

Documentation requirements

Privacy and security protocols

For Community Members:

System awareness and capabilities

Appropriate usage scenarios

Privacy protection understanding

Feedback submission processes

Training should also set realistic expectations with communities in areas such as:

Cultural Appropriateness: Systems that respect community values and practices

Accessibility: Tools usable across different languages, abilities, and resources

Community Control: Influence over how systems operate in their communities

Trust Preservation: Technology that doesn't undermine community relationships

Resource Enhancement: Additional capacity rather than redirection of scarce resources

Historical Awareness: Recognition of past institutional harms and traumas

Value Alignment: Systems that reinforce rather than contradict community values

Success Measurement

Developing and deploying this AI system calls for a comprehensive, multidisciplinary approach to measuring success. Without a robust evaluation framework, the system risks not only being ineffective but also harming the vulnerable populations it aims to serve. Several factors make a comprehensive, sustainable, and agile success measurement framework a priority: the unique ethical implications of AI involvement, the multidimensional definition of success, the evolving nature of abuse dynamics, the range of stakeholder accountabilities, and the need to prevent algorithmic bias.

Quantitative Assessment

Short-Term Process Metrics:

System accuracy (sensitivity/specificity)

Response time improvements

User adoption rates

Stakeholder satisfaction scores

Resource connection success rates

Data quality metrics
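
To make the system accuracy metric concrete, the following minimal sketch computes sensitivity and specificity from confusion-matrix counts. The function name and the counts are illustrative assumptions, not figures from any deployment.

```python
def sensitivity_specificity(tp, fp, tn, fn):
    """Compute sensitivity and specificity from confusion-matrix counts.

    sensitivity = TP / (TP + FN): share of actual high-risk cases flagged.
    specificity = TN / (TN + FP): share of other cases correctly not flagged.
    A rate is returned as None when its denominator is zero.
    """
    sensitivity = tp / (tp + fn) if (tp + fn) else None
    specificity = tn / (tn + fp) if (tn + fp) else None
    return sensitivity, specificity


# Illustrative counts only; not results from a real system.
sens, spec = sensitivity_specificity(tp=40, fp=30, tn=920, fn=10)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
# prints "sensitivity=0.80, specificity=0.97"
```

Reporting both figures side by side matters here because a missed case and a false alarm carry different harms, and a single accuracy number can hide either one.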

Medium-Term Impact Metrics:

Early intervention rate increases

Reduction in repeat incidents

Service utilization improvements

Protective order compliance rates

Case clearance improvements

Resource utilization efficiency

Long-Term Outcome Metrics:

Reduction in severe violence incidents

Decreased domestic homicide rates

Improved prosecution outcomes

Reduced recidivism

Economic impact assessment (healthcare costs, productivity)

System trust indicators

Generational impact measures

Qualitative Assessment

Beyond quantitative metrics, qualitative assessment should include:

Survivor experience research

Stakeholder perception studies

Case studies of system successes and failures

Community impact narratives

Cultural appropriateness evaluation

Ethical impact assessment

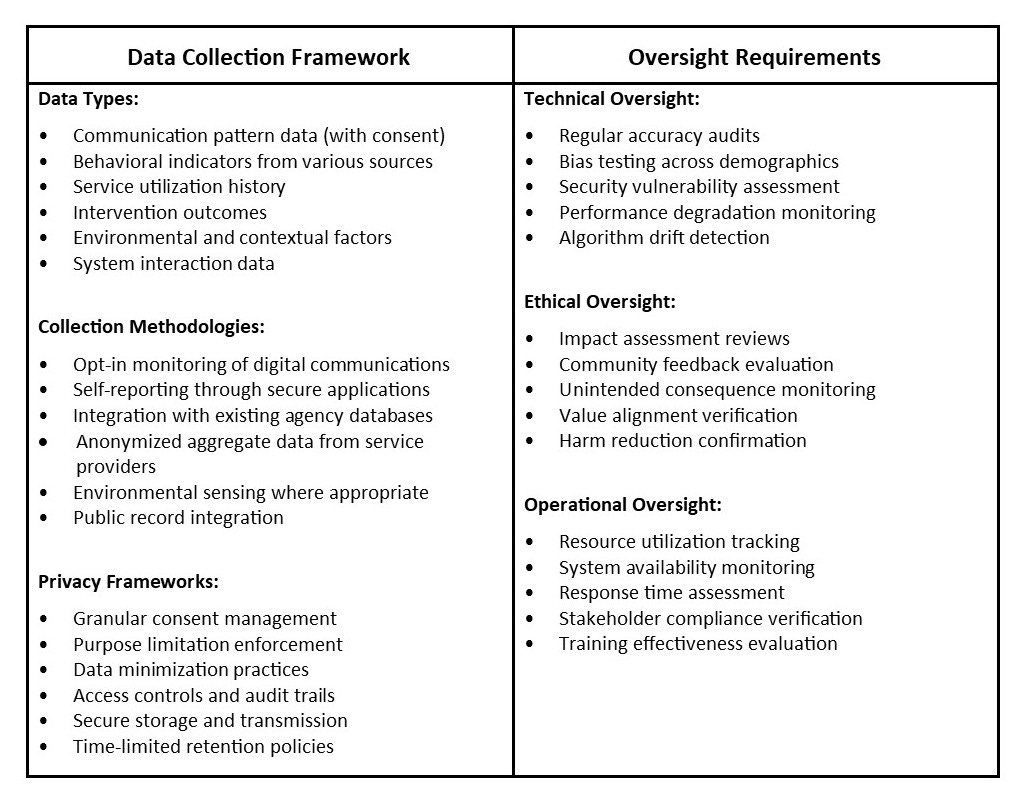

Data Collection and Oversight Requirements

Additional Considerations

Beyond the social, ethical, technical, governance, policy, procedure, data, and stakeholder ownership/alignment considerations, several additional key factors will determine the ultimate success of AI’s contribution to domestic violence solutions. These include:

Trust Building:

Demonstrated system reliability

Transparent operation and decision-making

Visible community involvement

Consistent privacy protection

Proven effectiveness over time

Resource Adequacy:

Sufficient intervention capacity

Technical infrastructure investment

Trained personnel availability

Sustainable funding mechanisms

Comprehensive support services

Integration Effectiveness:

Seamless workflow incorporation

Minimal duplicate data entry

Consistent information sharing

Coordinated response protocols

Compatible technical standards

Adaptability:

Evolving threat pattern recognition

Changing social context awareness

New technology incorporation

Emerging stakeholder needs accommodation

Continuous learning implementation

Participatory Evaluation Design:

Success metrics should be developed with input from domestic violence survivors, advocates, and frontline service providers. Their lived experience and professional expertise are essential for creating meaningful measures that reflect real-world priorities rather than technical abstractions.

Ethical Data Collection - Collecting outcome data must be balanced with safety and privacy concerns:

Minimize re-traumatization through careful data collection protocols

Ensure absolute confidentiality and security of sensitive information

Use trauma-informed approaches to evaluation

Offer genuine value to participants in return for their contribution

Provide clear opt-out mechanisms without penalty

Contextual Interpretation - Metrics must be interpreted within their proper contexts:

Lower usage in certain communities might reflect technological barriers rather than less need

Short-term increases in reported violence might actually represent improved disclosure rather than system failure

Successful outcomes look different across cultural contexts and abuse types

Independent Oversight - To maintain objectivity, success measurement should include independent evaluation components:

External audits by domestic violence experts

Ethical review of measurement practices

Transparent reporting of both positive and negative findings

Regular reassessment of the measurement framework itself

Continuous Refinement - The measurement framework should evolve based on:

Emerging research on domestic violence dynamics

Technological developments

User feedback and experiences

Identified gaps or biases in existing metrics

Changes in legal and social service landscapes

Part 3, the final part of Chapter 3, will cover Challenges and provide an Implementation Roadmap.